Requirements for RiffPod:

- Receive UDP packets from the device, convert them to audio samples and save them as WAV files

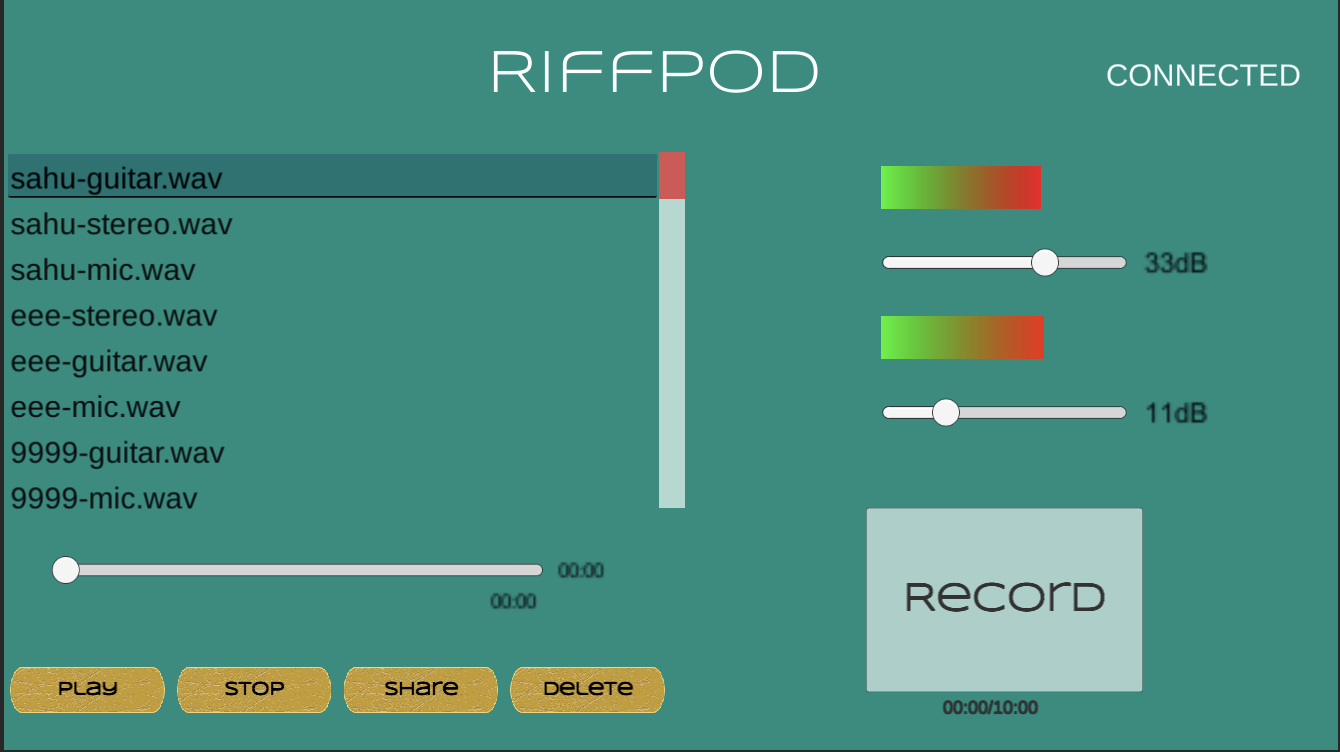

- Play back, manage and share the saved recordings

- Show connectivity status

- Show audio signal strength

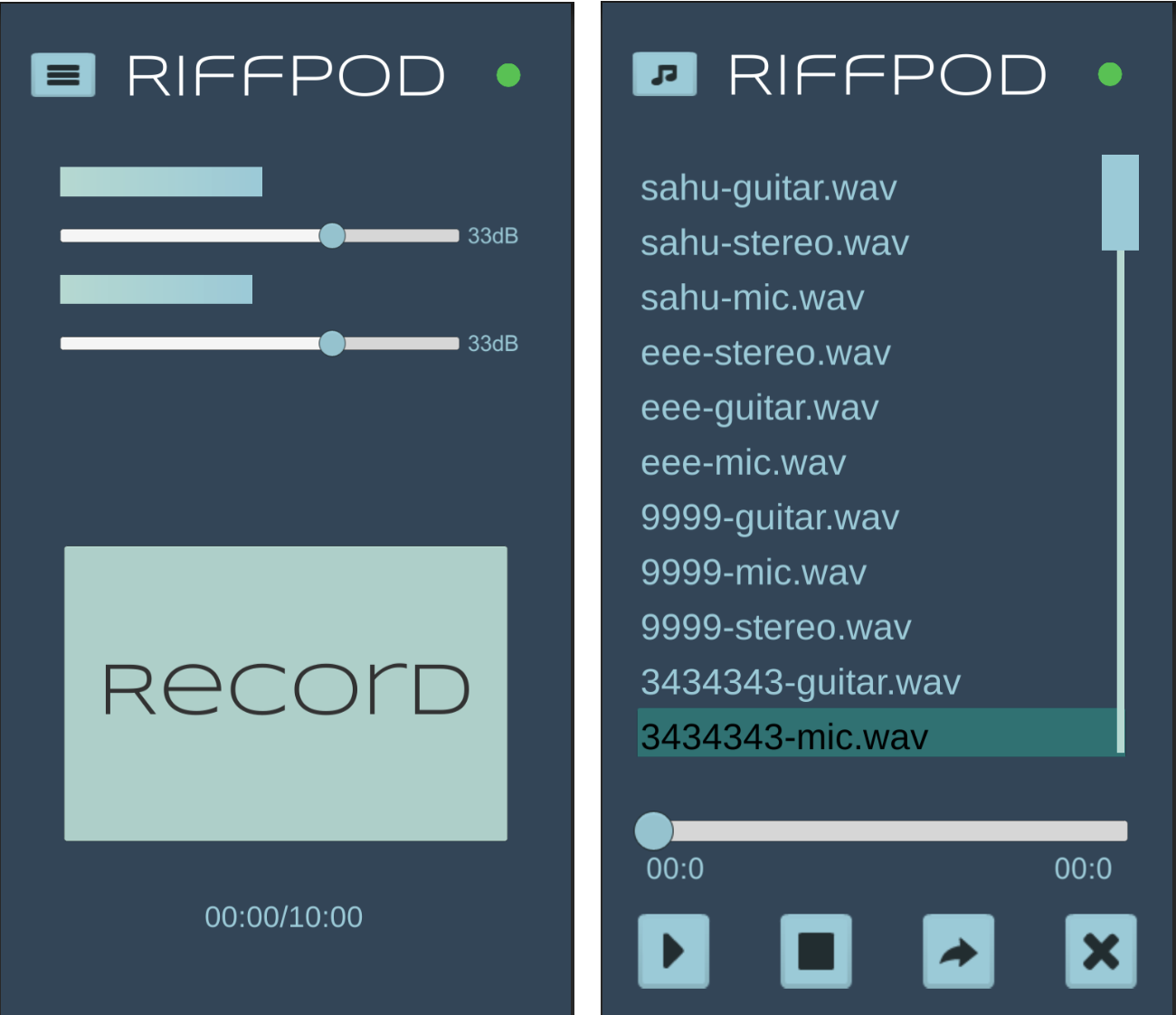

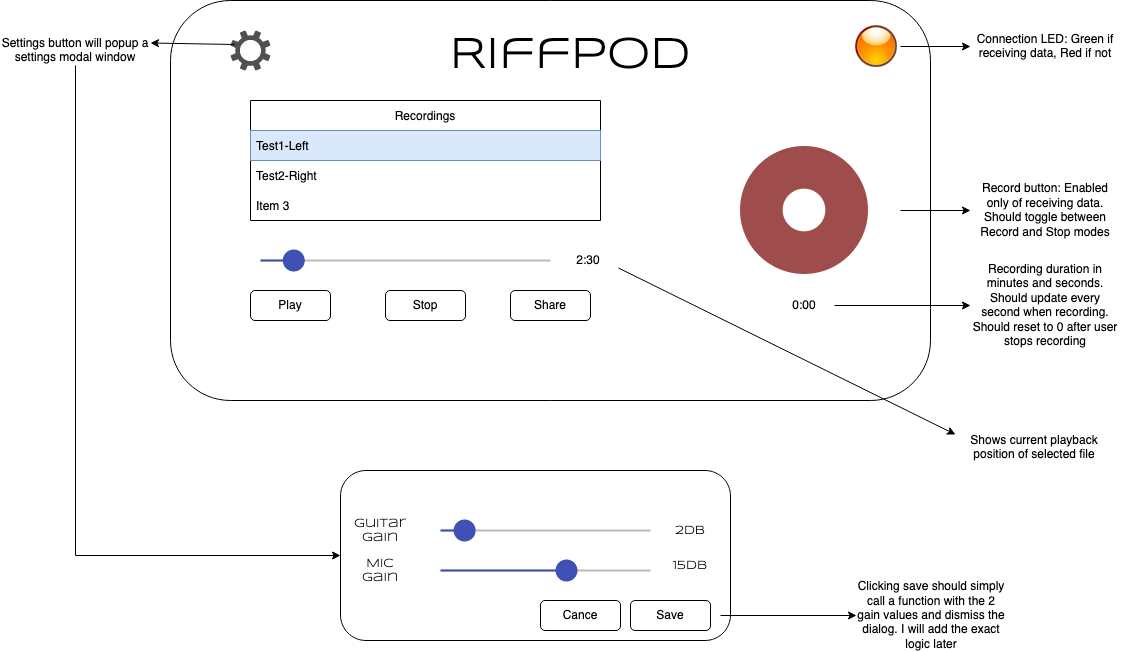

Original design spec:

Simulator

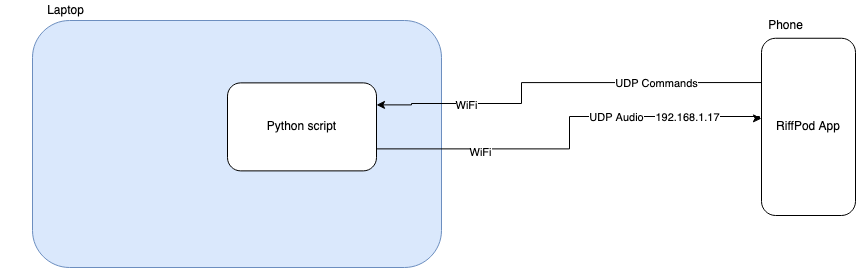

I created a Python script that generates a sinusoidal audio wave, packs it into UDP packets using the same format as the RiffPod hardware and streams them to the app. This made testing much easier, since the hardware was no longer needed.
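A minimal sketch of what such a simulator could look like. The real RiffPod packet format is not described here, so everything about the wire format below is an assumption made for illustration: raw little-endian signed 16-bit samples, a single channel, 256 samples per packet, a 44.1 kHz sample rate, and the host/port defaults.

```python
import math
import socket
import struct
import time

SAMPLE_RATE = 44100        # assumed sample rate in Hz
SAMPLES_PER_PACKET = 256   # assumed number of samples per UDP packet
TONE_HZ = 440.0            # test tone frequency


def make_packet(start_index, freq=TONE_HZ, count=SAMPLES_PER_PACKET):
    """Pack `count` sine samples as little-endian int16 (assumed format)."""
    samples = [
        int(32767 * 0.5 * math.sin(2 * math.pi * freq * (start_index + i) / SAMPLE_RATE))
        for i in range(count)
    ]
    return struct.pack(f"<{count}h", *samples)


def stream(host="127.0.0.1", port=5005, seconds=1.0):
    """Send a sine tone to (host, port), paced roughly in real time."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    total = int(seconds * SAMPLE_RATE)
    sent = 0
    try:
        while sent < total:
            sock.sendto(make_packet(sent), (host, port))
            sent += SAMPLES_PER_PACKET
            # Sleep for the duration of one packet so the app sees a live-like stream.
            time.sleep(SAMPLES_PER_PACKET / SAMPLE_RATE)
    finally:
        sock.close()
    return sent
```

Calling `stream("192.168.1.20", 5005, seconds=10)` (a hypothetical address) would send ten seconds of tone. Passing the running sample index into `make_packet` keeps the sine phase continuous across packet boundaries, which avoids clicks in the recorded audio.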

Video showing the simulator in action with the first version of the Unity-based app:

App Architecture

A background thread binds to a UDP socket, listening for audio data from the device/Python simulator. Data is saved to an audio buffer in memory.
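The receive pattern can be sketched as follows. The app itself is built in Unity, so this Python version only illustrates the threading model, not the app's actual code. The class and function names, the port, and the int16 payload format are all hypothetical.

```python
import socket
import struct
import threading


def decode_packet(data):
    """Decode a payload of little-endian int16 samples (assumed format)."""
    count = len(data) // 2
    return struct.unpack(f"<{count}h", data[:count * 2])


class UdpAudioReceiver:
    """Background thread that appends incoming samples to an in-memory buffer."""

    def __init__(self, host="0.0.0.0", port=5005):
        self.addr = (host, port)
        self.samples = []              # the in-memory audio buffer
        self.lock = threading.Lock()   # guards `samples` against the UI thread
        self._stop = threading.Event()
        self._thread = None

    def handle_packet(self, data):
        decoded = decode_packet(data)
        with self.lock:
            self.samples.extend(decoded)

    def _run(self, sock):
        while not self._stop.is_set():
            try:
                data, _sender = sock.recvfrom(4096)
            except socket.timeout:
                continue               # wake up periodically to check the stop flag
            self.handle_packet(data)
        sock.close()

    def start(self):
        sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        sock.bind(self.addr)
        sock.settimeout(0.2)
        self._thread = threading.Thread(target=self._run, args=(sock,), daemon=True)
        self._thread.start()

    def stop(self):
        self._stop.set()
        if self._thread is not None:
            self._thread.join()
```

The socket timeout is what lets `stop()` return promptly: without it, `recvfrom` would block indefinitely whenever the device stops sending.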

The audio buffer holds 10 minutes of audio, so a single recording is limited to 10 minutes. TO-DO: Remove this limitation by introducing a circular buffer and another consumer thread that would write the data to disk.
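One way the TO-DO could be approached is sketched below: a fixed-size ring buffer shared between the receiving thread (producer) and a disk-writing thread (consumer). This is not implemented in the app. `RingBuffer` and `drain_to_file` are hypothetical names, and the sketch writes raw bytes only, leaving the WAV header to be added once the final length is known.

```python
import threading


class RingBuffer:
    """Fixed-size byte ring buffer for one producer and one consumer."""

    def __init__(self, capacity):
        self.buf = bytearray(capacity)
        self.capacity = capacity
        self.read_pos = 0
        self.size = 0                  # number of unread bytes
        self.closed = False
        self.cond = threading.Condition()

    def write(self, data):
        """Block until there is room, then copy `data` in (wrapping if needed)."""
        if len(data) > self.capacity:
            raise ValueError("chunk larger than buffer")
        with self.cond:
            while self.capacity - self.size < len(data):
                self.cond.wait()
            write_pos = (self.read_pos + self.size) % self.capacity
            first = min(len(data), self.capacity - write_pos)
            self.buf[write_pos:write_pos + first] = data[:first]
            self.buf[0:len(data) - first] = data[first:]
            self.size += len(data)
            self.cond.notify_all()

    def read(self, max_bytes):
        """Block until data is available; return b"" once closed and drained."""
        with self.cond:
            while self.size == 0 and not self.closed:
                self.cond.wait()
            n = min(max_bytes, self.size)
            first = min(n, self.capacity - self.read_pos)
            out = bytes(self.buf[self.read_pos:self.read_pos + first])
            out += bytes(self.buf[:n - first])
            self.read_pos = (self.read_pos + n) % self.capacity
            self.size -= n
            self.cond.notify_all()
            return out

    def close(self):
        """Signal that no more data will be written."""
        with self.cond:
            self.closed = True
            self.cond.notify_all()


def drain_to_file(ring, path, chunk=4096):
    """Consumer loop: move bytes from the ring buffer to disk until closed."""
    with open(path, "wb") as f:
        while True:
            data = ring.read(chunk)
            if not data:
                break
            f.write(data)
```

In this sketch the producer blocks when the buffer is full. For a live UDP stream, dropping the oldest data or growing the buffer might be a better choice, because a blocked receiver would lose packets anyway.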

Everything else happens on the main UI thread, leveraging Unity's Start and Update callbacks and input event handling. When the user taps the Record button, the record timer starts ticking and incoming data is stored in the audio buffer. When the user taps the Stop button, they are prompted for a recording file name and the data is saved as WAV files in the app's persistent data folder.

RiffPod is a 2-channel device, i.e. it supports streaming audio from two instruments, e.g. a guitar and a microphone. Three WAV files are created for every recording: the microphone audio, the guitar audio and a stereo file with both microphone and guitar.
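The three-file output can be illustrated with Python's standard `wave` module. This is a sketch rather than the app's Unity code: the file name suffixes, the 16-bit sample width, the default sample rate, and the choice of microphone as the left stereo channel are all assumptions.

```python
import struct
import wave


def write_wav(path, channels, sample_rate=44100):
    """Write equal-length lists of int16 samples as a 16-bit PCM WAV file."""
    n = len(channels[0])
    # Interleave frame by frame: ch0[0], ch1[0], ch0[1], ch1[1], ...
    interleaved = [ch[i] for i in range(n) for ch in channels]
    with wave.open(path, "wb") as w:
        w.setnchannels(len(channels))
        w.setsampwidth(2)              # 2 bytes per sample = 16-bit audio
        w.setframerate(sample_rate)
        w.writeframes(struct.pack(f"<{len(interleaved)}h", *interleaved))


def save_recording(base_path, mic, guitar, sample_rate=44100):
    """Save mic-only, guitar-only and stereo files; return their paths."""
    paths = {
        "mic": base_path + "_mic.wav",        # hypothetical naming scheme
        "guitar": base_path + "_guitar.wav",
        "stereo": base_path + "_stereo.wav",
    }
    write_wav(paths["mic"], [mic], sample_rate)
    write_wav(paths["guitar"], [guitar], sample_rate)
    write_wav(paths["stereo"], [mic, guitar], sample_rate)  # mic = left (assumed)
    return paths
```

Keeping the two mono files alongside the stereo mix means each instrument can be shared or edited on its own without having to split channels later.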

App UI

I tried to cram everything together into a single screen, which forced a landscape layout. This required the user to use both hands, which was awkward when you had a guitar and/or mic to manage as well.

I switched to portrait, with separate screens for recording and file management.